Statistics are available in different areas of the platform. This article describes the Statistics tab of a collection in the Library.

For every question in a collection, we keep track of its performance.

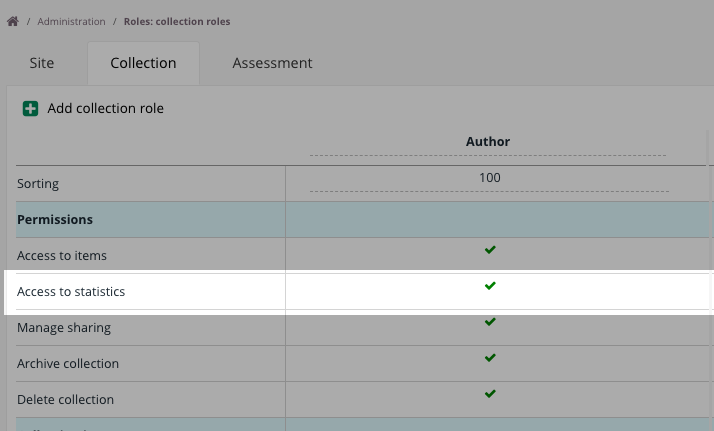

Access to statistics tab

To access the Statistics tab, you need the permission 'Roles > Collection > Access to statistics'.

Statistics

The Statistics tab shows the following statistics.

Chance Score

There is a difference between the chance and the chance score. Consider, for example, a Multiple Choice question with 4 options. The chance of picking the correct answer is 1 out of 4, or 25%. If the maximum score for the question is 1 point, the chance score is 0.25 (25% of 1 point). If the question were worth 3 points, the chance score would be 25% of 3 = 0.75. For comparison, Cirrus always displays the chance score as a percentage of the maximum score for the question.
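The arithmetic in the example above can be sketched in Python. This is a minimal illustration, assuming a question with exactly one correct option that a candidate guesses uniformly at random; the function names are hypothetical and not part of Cirrus.

```python
def chance_score(num_options: int, max_score: float) -> float:
    """Chance score in points: the probability of guessing the single
    correct option multiplied by the question's maximum score."""
    if num_options < 1:
        raise ValueError("a question needs at least one option")
    chance = 1 / num_options  # e.g. 1 out of 4 = 0.25
    return chance * max_score


def chance_score_percentage(num_options: int, max_score: float) -> float:
    """Chance score expressed as a percentage of the maximum score."""
    if max_score <= 0:
        raise ValueError("maximum score must be positive")
    return 100 * chance_score(num_options, max_score) / max_score


print(chance_score(4, 1))             # 0.25
print(chance_score(4, 3))             # 0.75
print(chance_score_percentage(4, 3))  # 25.0
```

Because the percentage divides the maximum score back out, it is 25% whether the question is worth 1 or 3 points, which is what makes it suitable for comparing questions.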

Chance score is not available for Fill in the Blank, Hotspot, Sowiso, Numeric, Short answer, Essay and File response questions.

P value

The difficulty value of the item

The item difficulty level is based on the P-value, which is one of the core concepts in psychometrics. Item difficulty is important for evaluating the characteristics of an item and deciding whether it should remain part of the assessment. In many cases, items are removed if they are too easy or too hard. The difficulty level also helps you understand how the items, and the test as a whole, operate as a measurement instrument.

| Range | Interpretation | Notes |
|---|---|---|
| 0.0-0.3 | Hard | Test-takers are at the chance level or even below, so your item might be too difficult or have other issues. |
| 0.3-0.7 | Medium | 0.30-0.50: items in this range will challenge even top test-takers. 0.50-0.70: these items are fairly common and a bit tougher. |
| 0.7-1.0 | Easy | These items are considered easy; the higher the P-value, the easier the item. |
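The table can be read as a simple classification rule. The sketch below assumes that a value exactly on a boundary (0.3 or 0.7) counts as Medium, because the table does not say which side those values belong to. The parameters `hard_below` and `easy_above` are hypothetical stand-ins for the difficulty level threshold settings; the actual values in your environment may differ.

```python
def difficulty_status(p: float, hard_below: float = 0.3, easy_above: float = 0.7) -> str:
    """Map a P-value to Hard / Medium / Easy using the ranges in the table.

    Assumption: a value of exactly 0.3 or 0.7 is classified as Medium.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("a P-value lies between 0 and 1")
    if p < hard_below:
        return "Hard"
    if p <= easy_above:
        return "Medium"
    return "Easy"


for p in (0.2, 0.5, 0.85):
    print(p, difficulty_status(p))
```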

Calculation of the P-value (for all question types):

P = average score of all candidates / maximum score for the question
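As a sketch, the formula translates directly into a short function. The function name and the sample scores are illustrative only.

```python
def p_value(scores: list[float], max_score: float) -> float:
    """P = average score of all candidates / maximum score for the question."""
    if not scores:
        raise ValueError("no candidate scores")
    if max_score <= 0:
        raise ValueError("maximum score must be positive")
    average = sum(scores) / len(scores)
    return average / max_score


# Four candidates on a 3-point question: the average is 1.5, so P = 0.5
print(p_value([2, 1, 3, 0], 3))
```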

Pc value

The corrected difficulty value for the item

Also known as P', this is similar to the P-value, but it takes the chance score of the item into account.
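This article does not state the exact formula Cirrus uses for P'. A common correction for guessing rescales the P-value so that chance-level performance maps to 0 and a perfect score stays at 1: P' = (P - c) / (1 - c), where c is the chance score as a fraction of the maximum score. The sketch below assumes that form; treat it as an illustration, not as Cirrus's implementation.

```python
def corrected_p_value(p: float, chance: float) -> float:
    """Assumed correction for guessing: P' = (P - c) / (1 - c).

    p      -- the item's P-value (0..1)
    chance -- chance score as a fraction of the maximum score, e.g. 0.25
              for a 4-option Multiple Choice question; 0 when not applicable
    """
    if not 0.0 <= chance < 1.0:
        raise ValueError("chance must be in [0, 1)")
    return (p - chance) / (1 - chance)


print(corrected_p_value(0.625, 0.25))  # 0.5
print(corrected_p_value(0.625, 0.0))   # 0.625: without a chance score, P' equals P
```

Under this assumed formula, an item answered correctly less often than chance would get a negative P'.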

Angoff

The Angoff value is described in the following article.

The Angoff value is only visible if activated.

Used in

Indicates the number of assessments that include the item.

There are important distinctions based on the assessment type:

- Manual assessments
  - The item is counted in Used In if it is included in the question selection and the form is random.
  - If the item is in the question selection but the form is fixed and the item is not placed on the form, it is not counted in Used In.
- Blueprint-based assessments
  - The item is counted in Used In if its associated learning objective, taxonomy, or topic is included in the blueprint matrix.
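The counting rules above can be sketched as a small function. The data model (`ManualAssessment`, `BlueprintAssessment`, `items_on_fixed_form`, `matrix_tags`) is hypothetical and only serves to make the rules concrete. The article only says that an item on a fixed form that is not placed is not counted; the sketch assumes that an item that is placed on the fixed form is counted.

```python
from dataclasses import dataclass, field


@dataclass
class ManualAssessment:  # hypothetical model of a manual assessment
    question_selection: set[str]  # item IDs in the question selection
    form_is_random: bool
    items_on_fixed_form: set[str] = field(default_factory=set)


@dataclass
class BlueprintAssessment:  # hypothetical model of a blueprint-based assessment
    matrix_tags: set[str]  # learning objectives, taxonomies and topics in the matrix


def counts_in_used_in(item_id: str, item_tags: set[str], assessment) -> bool:
    if isinstance(assessment, ManualAssessment):
        if item_id not in assessment.question_selection:
            return False
        if assessment.form_is_random:
            return True
        # Fixed form: not counted unless placed (assumption: counted if placed)
        return item_id in assessment.items_on_fixed_form
    if isinstance(assessment, BlueprintAssessment):
        # Counted if any of the item's objectives/taxonomies/topics is in the matrix
        return bool(item_tags & assessment.matrix_tags)
    raise TypeError(f"unknown assessment type: {type(assessment).__name__}")


def used_in(item_id: str, item_tags: set[str], assessments: list) -> int:
    return sum(counts_in_used_in(item_id, item_tags, a) for a in assessments)


assessments = [
    ManualAssessment({"MCQ-08"}, form_is_random=True),
    ManualAssessment({"MCQ-08"}, form_is_random=False),  # in selection, not placed
    ManualAssessment({"MCQ-08"}, False, {"MCQ-08"}),     # placed on the fixed form
    BlueprintAssessment({"topic:algebra"}),
]
print(used_in("MCQ-08", {"topic:algebra"}, assessments))
```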

Attempts

Shows the total number of times an item was answered by candidates across all assessments.

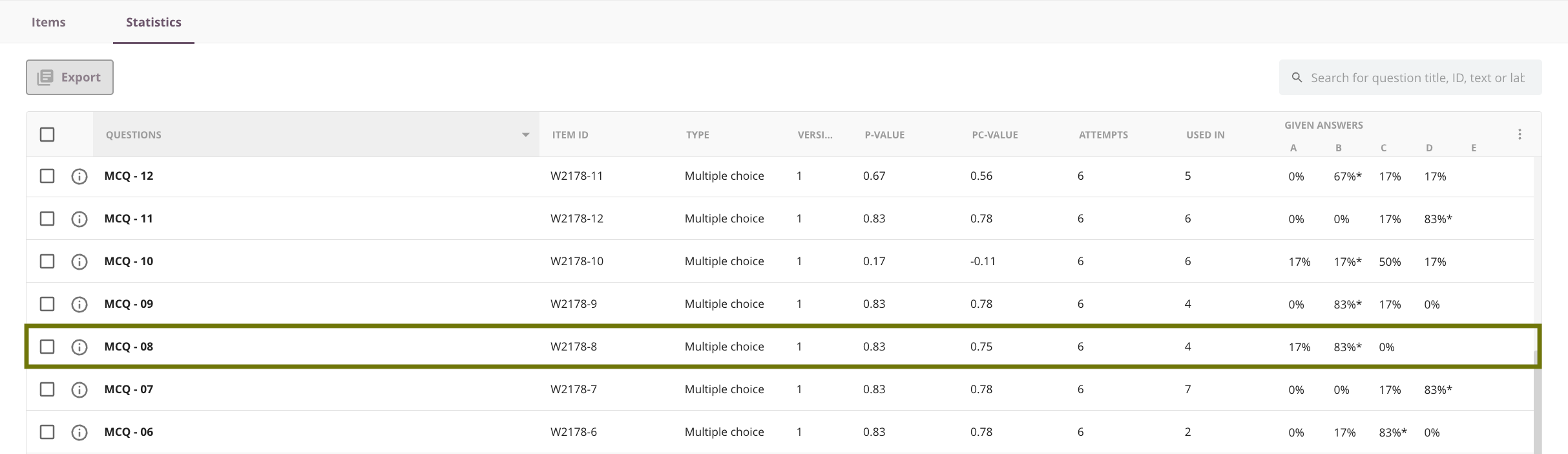

In the screenshot above, the item MCQ- 08 Version 1 is used in four assessments and has been attempted six times.

Click the 'i' icon next to the item title to view all details of this question. Then, click the 'Used In' arrow to see which assessments the question is used in.

Answered

The last time the item was answered.

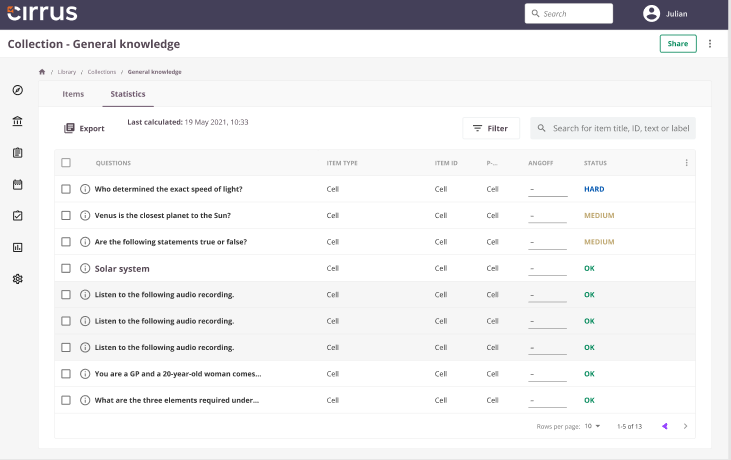

Please note that statistics are only updated once the results are published.

At the top, you can see when the statistics were last calculated.

Given answers

Shows the percentage of candidates who answered the question and chose a specific answer option. This is mostly applicable to questions with alternatives.
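A minimal sketch of this percentage, assuming each answering candidate chooses exactly one option (as in a single-answer Multiple Choice question). The function name and the sample answers are hypothetical.

```python
from collections import Counter


def given_answer_percentages(choices: list[str]) -> dict[str, float]:
    """Percentage of answering candidates who chose each option.

    Assumes one chosen option per candidate (single-answer questions).
    """
    if not choices:
        return {}
    counts = Counter(choices)
    total = len(choices)
    return {option: 100 * n / total for option, n in counts.items()}


print(given_answer_percentages(["A", "B", "A", "C"]))
```

Options that no candidate chose do not appear in this result; an overview of all alternatives would list them with 0%.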

Status

Indicates the difficulty of an item (Hard, Medium or Easy). For more information, see difficulty level threshold.

When evaluating your items, you can also take the Rit value into account within the context of an assessment. Also see our article "Reports in Cirrus: Assessment performance".