A Coordinator sets up a marking workflow in 3 steps. This article explains step 1: Settings.

How to get here:

- Go to Marking > Coordinator tab

- This will open the Coordinator overview page

- Click on an exam that needs a marking workflow (status: Assessor needed)

Note: If you cannot see this, your role does not allow it. Contact a System Admin of your organisation if you think this is incorrect.

Settings tab

The first step in setting up a marking workflow is completing the Settings tab. This page will guide you through the options.


Follow the instructions below to learn what each setting means and how you can use it in your workflow.

1. Assessing type

One assessor

Each candidate (or question) will be assessed by one marker from the pool. There is one pair of eyes marking each script/question.

Two assessors

Each candidate (or question) will be assessed by two markers from the pool. There are two pairs of eyes marking each script/question.

Scoring with Two assessors

Each assessor has an equal voice in determining the marks. The scores of the two assessors are averaged into one score.
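As a rough illustration of the averaging described above, here is a minimal sketch. The function name is hypothetical, and whether the platform rounds the result is not stated in this article, so the sketch leaves it unrounded.

```python
def combined_score(score_a: float, score_b: float) -> float:
    """Return the plain average of two assessors' scores (equal weight).

    Hypothetical helper for illustration; not the platform's own code.
    """
    return (score_a + score_b) / 2


# Assessor A gives 6 points, assessor B gives 8 points.
print(combined_score(6, 8))
```

With these example values the candidate ends up with 7 points for the script or question.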

2. Allocation by

Lets you choose between allocation by Candidates or Items.

- Allocation per Candidate: The coordinator will assign assessors to candidates. This is also known as 'Vertical Marking'.

- Allocation per Items: The coordinator will assign assessors to items. This is also known as 'Horizontal Marking'.

3. Allocation of assessors

- Manual: The coordinator can assign markers/moderators per item or per candidate.

- Let Assessor decide: This option gives marker(s)/moderator(s) the freedom to select items or candidates themselves. Once a marker starts working on a specific candidate/item, that candidate/item is fully assigned to this marker.

4. Sharing assessments between assessors

Checking this box opens two options:

- Only if assessing same: a marker can view the scores, comments and annotations of other markers only if they are assigned as assessor/moderator for the same candidates/items.

- All candidates/items: a marker has access to all information from other markers/moderators.

Best practice: To ensure the highest level of impartiality while assessing, it is recommended not to share assessments when using '1. Assessing type: Two Assessors'.

5. Anonymous

- Anonymous candidate: this hides the names of candidates. Each candidate is given a random number, e.g. Anonymous 5.

- Anonymous assessor: this ensures that the assessors are hidden from each other.

Both features can be used to ensure more impartial marking.

6. Manually score auto-scored questions

Check this box if you want to be able to overwrite the score of auto-scored questions.

Auto-scored questions are automatically marked and submitted by the system. With this option, a marker is allowed to overrule the score for this type of question, even though the system has already pre-filled the score.

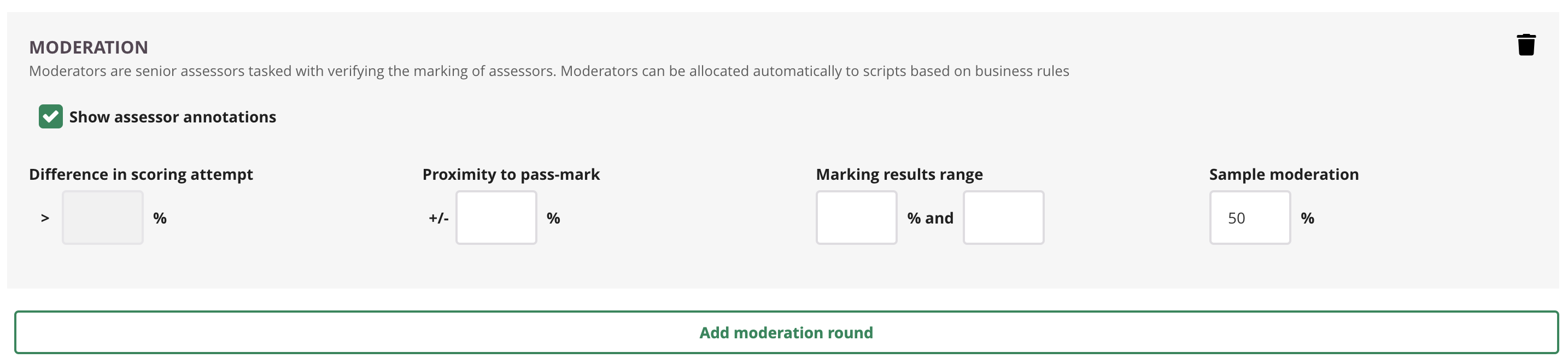

7. Add moderation round

Click on 'Add moderation round' to enable one or more moderation rounds for this schedule.

It's possible to set up 3 different moderation rounds, each with its own moderation criteria.

Criteria to select scripts for moderation

Use one or more criteria to determine which scripts are eligible for moderation.

It is possible but not mandatory to combine criteria when setting up moderation. Leave the fields that you don't want to use empty.

1. Difference in scoring attempt

This option only applies to Assessing Type 'Two assessors'.

When each script is going to be assessed by two different assessors, you may expect their scores will be more or less the same.

If the scores of the two assessors deviate too much, you can use this parameter to ensure a moderator reviews these scripts.
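The check described above could be sketched as follows. This is an illustration only: the function name, the `max_difference` parameter, and the choice to flag a script only when the gap is strictly larger than the threshold are assumptions, not the platform's documented behaviour.

```python
def differs_too_much(score_a: float, score_b: float, max_difference: float) -> bool:
    """Flag a script when two assessors' scores are further apart than allowed.

    Hypothetical helper; the strict '>' comparison is an assumption.
    """
    return abs(score_a - score_b) > max_difference


# Threshold of 3 points: a 5-point gap is flagged, a 1-point gap is not.
print(differs_too_much(4, 9, 3))
print(differs_too_much(6, 7, 3))
```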

2. Proximity to pass-mark

When a candidate's score is very close to the pass/fail boundary, it is considered best practice to have a moderator review the script and determine the final grade.
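A minimal sketch of such a proximity check, assuming scores, pass-mark and margin are all expressed as percentages. The function and parameter names are hypothetical, and treating a score exactly on the edge of the margin as "close" is an assumption.

```python
def near_pass_mark(score_pct: float, pass_mark_pct: float, margin_pct: float) -> bool:
    """Return True when a score lies within the margin on either side of the pass-mark.

    Hypothetical helper; the inclusive '<=' comparison is an assumption.
    """
    return abs(score_pct - pass_mark_pct) <= margin_pct


# Pass-mark 55%, margin 3%: 53% is close enough for moderation, 70% is not.
print(near_pass_mark(53, 55, 3))
print(near_pass_mark(70, 55, 3))
```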

3. Marking result range

This option lets you specify a range of results that must be checked by a moderator.

Coordinators can set this to 0-100% so a moderator will have to check all scripts. The moderator can review just a few and use 'Moderate multiple' to bulk accept the assessors' scores.

4. Sample moderation

Use sample moderation to let the system randomly pick scripts that will go to moderation.

Example: when you have 100 scripts and you set sample moderation to 20%, 20 randomly picked scripts will go to the moderator.
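The example above can be sketched in a few lines. This is an illustration under stated assumptions: the function name, the optional `seed` parameter, and rounding the sample size to the nearest whole script are all hypothetical, since this article does not describe how the system handles fractions.

```python
import random


def pick_sample(script_ids: list, percentage: float, seed=None) -> list:
    """Randomly pick the given percentage of scripts for moderation.

    Hypothetical helper; rounding to the nearest whole script is an assumption.
    """
    rng = random.Random(seed)
    sample_size = round(len(script_ids) * percentage / 100)
    # rng.sample draws without replacement, so no script is picked twice.
    return rng.sample(script_ids, sample_size)


scripts = list(range(1, 101))          # 100 scripts, numbered 1 to 100
selected = pick_sample(scripts, 20)    # 20% sample
print(len(selected))
```

Each run selects a different set of 20 scripts unless a fixed `seed` is passed.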

Save and next step

Don't forget to click 'Save and next step' to move to the next step in the process: the 'Assessors' tab.